Training Evaluation: A Guide to Measuring Program Success

Many businesses train their staff to enhance productivity. But how can you tell whether the training is working? The most reliable approach is to evaluate your training programs.

In this article, you’ll learn what training evaluation is and what benefits it brings. You’ll also find the most widely used evaluation models and tools for assessing your program’s effectiveness.

What Is Training Evaluation?

Training evaluation is a structured approach to assess how well training programs achieve their goals, support business needs, and drive long-term impact. It helps identify skill gaps, measure improvements in employee performance, and refine training strategies using real data.

Advances in AI have made training evaluation more efficient. AI-powered analytics can now process large volumes of training data, detect trends, and provide predictive insights, allowing organizations to refine their learning strategies in real time.

Why Evaluate Training Programs?

With 49% of learning professionals reporting a skills crisis, addressing training gaps is more critical than ever. Companies that prioritize career-driven learning are better equipped to develop the right skills and adapt to business needs. Training evaluation supports this effort by showing what worked and what didn’t, which guides adjustments to future training. It also checks whether training actually helps employees perform better.

Benefits of training evaluation

Although evaluating training effectiveness requires resources, its benefits outweigh the costs. Its main value lies in enabling companies to build well-structured, impactful training programs. A thorough evaluation delivers tangible benefits to your eLearning process:

- Keep learning relevant. 60% of workers will need training before 2027, yet only half currently have access to sufficient opportunities. A solid evaluation process helps spot weak areas early, so you can tweak the program in time.

- Cut costs and increase profitability. Companies with strong career development programs are 75% more confident in their profitability. A well-evaluated training strategy helps allocate resources efficiently, reducing hiring costs by fostering internal mobility.

- Increase employee engagement. 22% of organizations are prioritizing increasing learner usage of training programs. A systematic training evaluation process can help pinpoint where learners lose interest, allowing you to enhance your content with interactive features for increased participation.

What to Evaluate in Training Programs

To make your training evaluation structured and aligned with training objectives, ask five questions about your programs.

1. Are employees gaining the right knowledge and skills?

The priority is ensuring that employees learn, which means identifying skill gaps and refining training materials. To measure training effectiveness in this regard, you can:

- Conduct pre- and post-training assessments to compare employees’ understanding before and after the course.

- Give learners practical exercises and scenario-based tasks to see if they can apply what they’ve learned.

- Track assessment scores and certifications, if a course includes exams, to verify whether employees meet the expected knowledge level or still need to ‘level up.’
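As a rough illustration of the pre- and post-training comparison, here is a minimal Python sketch. The function name and the scores are hypothetical, and it assumes both lists hold the same learners in the same order.

```python
from statistics import mean

def knowledge_gain(pre_scores, post_scores):
    """Return the average pre score, average post score, and average point gain."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("each learner needs both a pre- and a post-training score")
    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return mean(pre_scores), mean(post_scores), mean(gains)

# Hypothetical scores (percent correct) for five learners, in the same order.
pre = [55, 60, 48, 70, 62]
post = [78, 82, 65, 88, 80]

avg_pre, avg_post, avg_gain = knowledge_gain(pre, post)
print(f"Average before: {avg_pre:.1f}%, after: {avg_post:.1f}%, gain: {avg_gain:.1f} points")
```

Looking at per-learner gains rather than only the group average can also show who still needs support.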

2. Are employees actively participating?

High engagement typically translates to better learning outcomes, while low engagement signals a need to adjust the format or content. Engagement metrics in training evaluation involve:

- Completion rates. If many employees drop out, the course might be too long, complex, or irrelevant.

- Active participation. Metrics like quiz attempts, involvement in discussions, and time spent on each training session are indicative of engagement levels.

- Training experience and satisfaction. Post-training surveys reveal whether employees found the course relevant, useful, and engaging — or boring and irrelevant.
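Completion rates are simple to compute once you have enrollment and completion counts. The sketch below is only an example: the course names, the numbers, and the 70% review threshold are assumptions, not recommended values.

```python
def completion_rate(enrolled, completed):
    """Share of enrolled learners who finished the course, as a percentage."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    return 100 * completed / enrolled

# Hypothetical course data: (enrolled, completed)
courses = {
    "Onboarding basics": (120, 108),
    "Advanced negotiation": (80, 36),
}

REVIEW_THRESHOLD = 70  # assumed cut-off; choose one that fits your context

for name, (enrolled, completed) in courses.items():
    rate = completion_rate(enrolled, completed)
    status = "review format or content" if rate < REVIEW_THRESHOLD else "ok"
    print(f"{name}: {rate:.0f}% completed ({status})")
```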

3. Are employees applying what they learned?

If employees struggle to apply their newfound knowledge, it may indicate a need for follow-up training or a redesign of the training approach. Here are some key evaluation methods:

- Manager and peer feedback. Direct supervisors and colleagues can assess whether employees demonstrate improved skills in their daily tasks.

- Workplace application tracking. Observe how employees use new skills on the job over time.

- Before-and-after metric comparison. Comparing metrics like sales close rate, productivity, and quality of work before and after training provides quantifiable evidence of impact.
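A before-and-after comparison usually comes down to a percent change per metric. Here is a minimal sketch with hypothetical metric names and values; note that whether a drop is good or bad depends on the metric.

```python
def percent_change(before, after):
    """Relative change from the pre-training value, as a percentage."""
    if before == 0:
        raise ValueError("the pre-training value must be non-zero")
    return 100 * (after - before) / before

# Hypothetical metrics: (value before training, value after training)
metrics = {
    "sales close rate (%)": (18.0, 22.5),
    "errors per 100 orders": (6.0, 4.5),
}

for name, (before, after) in metrics.items():
    print(f"{name}: {percent_change(before, after):+.1f}%")
```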

4. Are training programs contributing to your goals?

If a training program fails to produce business value, adjustments may be needed in content, delivery, or audience targeting. To identify areas for improvement, focus on tracking these aspects:

- Increased productivity and/or compliance, as well as a reduction in errors, show whether the training contributes to smoother workflows and greater precision.

- Fewer customer complaints and/or safety incidents reflect a positive change if the training was designed to address operational issues.

- Return on Investment (ROI) weighs training costs against measurable business benefits, such as increased revenue, cost savings, or higher customer satisfaction scores.

5. Are training benefits sustained over time?

A one-time assessment isn’t sufficient — training evaluation is a systematic process of tracking long-term knowledge retention and performance improvements. This includes:

- Testing employees 3-6 months after training: this reveals whether knowledge does or doesn’t stick.

- Career growth and internal mobility: when employees progress in their careers, it can indicate the training was effective.

- Training adaptability: this shows whether the training evolves to keep pace with changes in your industry.

Indicators of Training Effectiveness

Before starting the evaluation process, it’s important to define what success looks like. To do this, look at the key areas where training should drive improvements. Higher scores in these areas indicate that the program is achieving its intended outcomes.

- Knowledge retention and application. Training is only effective if employees absorb and use new information. If they struggle to apply what they’ve learned, it signals a gap. Measuring retention and application helps refine content to ensure that learning leads to actual workplace improvements.

- Learner engagement and completion rates. Active participation, attendance, and task completion indicate whether employees are engaged. High engagement boosts skill adoption and job performance, while low participation may point to content or delivery issues.

- Trainee satisfaction and behavioral changes. Training should drive real shifts in work habits. Passing an assessment means little if employees don’t apply the gained skills. Tracking behavioral changes shows whether training delivers actionable insights that improve efficiency.

- Performance and business impact. Training should enhance key metrics like sales, customer service, and error reduction. If it doesn’t contribute to business growth or operational efficiency, its value is questionable. Analyzing business impact ensures that training aligns with organizational goals.

- Training ROI and career growth. The financial outcomes of training should outweigh its costs through increased efficiency, reduced expenses, or higher revenue. A strong ROI justifies training investments and supports informed decisions about future programs.

Training Evaluation Models: A Guide to the Best

To evaluate training programs more systematically, you can use specific training evaluation models and adapt training strategies accordingly. We’ve identified five evaluation models that have remained popular and trusted over the years.

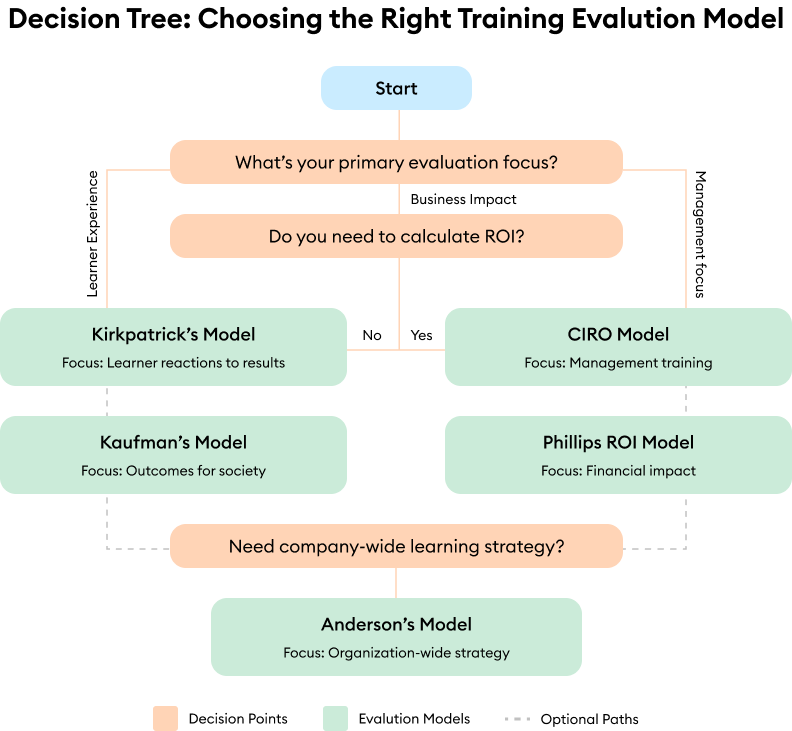

But how do you know which model fits your needs? It all depends on your focus — measuring learner experience, business impact, or management training. Here’s a quick decision tree to help you choose the right training evaluation model:

This gives you a quick overview, but let’s take a closer look at each model.

Kirkpatrick’s four-level training evaluation model

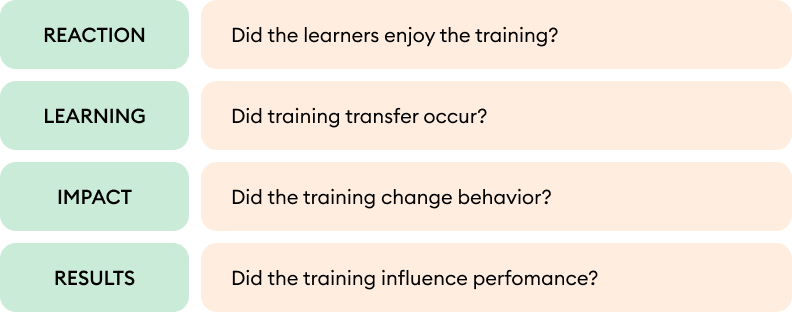

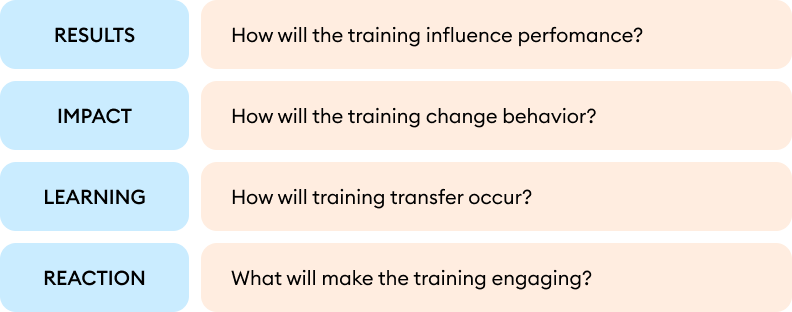

The Kirkpatrick Model is one of the oldest and most well-known training evaluation frameworks. Even after 60 years, it remains widely used. In 2010, Jim and Wendy Kirkpatrick refined it, creating the New World Kirkpatrick Model, which focuses more on business objectives and long-term impact. The model evaluates training across four levels: Reaction, Learning, Behavior, and Results. Let’s break down each one.

Level 1: Reaction

Once learners complete your course, assess their reactions. Have them complete a survey or conduct interviews, asking questions to assess engagement, relevance, and commitment to applying the training in the workplace:

- How satisfied are you with the training experience?

- Did the training content meet your expectations?

- Was the training relevant to your job role? If so, how?

- Did you learn anything new?

- How confident are you in applying the new knowledge in your daily tasks?

For more nuanced feedback, consider a Likert scale survey, which goes beyond simple yes/no answers and can enrich your evaluation insights.
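To show how Likert responses can be summarized, here is a small Python sketch. It assumes a 5-point scale (1 = strongly disagree, 5 = strongly agree) and treats answers of 4 or 5 as favorable; the responses are made up for illustration.

```python
from collections import Counter
from statistics import mean

# Hypothetical answers to one survey statement on an assumed 5-point scale.
responses = [5, 4, 4, 3, 5, 2, 4, 5, 3, 4]

def summarize_likert(scores):
    """Return the mean score and the percentage of favorable answers (4 or 5)."""
    counts = Counter(scores)
    favorable = 100 * (counts[4] + counts[5]) / len(scores)
    return mean(scores), favorable

avg, favorable = summarize_likert(responses)
print(f"Mean score: {avg:.1f} / 5, favorable responses: {favorable:.0f}%")
```

Reporting the favorable share alongside the mean helps because an average can hide a split between very satisfied and very dissatisfied learners.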

Level 2: Learning

This level evaluates how well participants acquired knowledge and skills, gained confidence, and developed the intent to apply learning in their roles. For this level, assessment methods include:

- Pre- and post-training assessments to measure knowledge growth.

- Practical exercises to gauge skill application.

- Self-assessments and peer feedback to confirm the learner’s readiness to execute real-world tasks.

Level 3: Behavior

Use 360-degree feedback to observe changes in an employee’s behavior after the training. This method collects input from colleagues, managers, and direct reports to give a comprehensive picture of how someone applies their skills at work. It works like a survey that compares self-perception with how others see the person, making it easier to spot strengths and areas for improvement. Also, check whether workplace conditions like leadership support and available resources help or block the application of new knowledge.
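One way to compare self-perception with others’ views is to subtract the average rating from others from the self-rating for each skill. The sketch below is only an illustration: the skills, the 1-5 scale, and the ratings are all hypothetical.

```python
from statistics import mean

# Hypothetical 1-5 ratings for one employee, per skill.
self_ratings = {"communication": 4, "delegation": 5, "planning": 3}
others_ratings = {
    "communication": [4, 5, 4],
    "delegation": [3, 3, 2],
    "planning": [4, 4, 3],
}

def perception_gaps(self_scores, others_scores):
    """Return the self-rating minus the average rating from others, per skill."""
    return {
        skill: self_scores[skill] - mean(others_scores[skill])
        for skill in self_scores
    }

gaps = perception_gaps(self_ratings, others_ratings)
for skill, gap in gaps.items():
    print(f"{skill}: gap {gap:+.2f}")
```

A large positive gap suggests the employee rates themselves higher than others do, which can be a useful starting point for a follow-up conversation.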

Level 4: Results

Ultimately, better results are the primary goal of corporate training. But instead of focusing solely on financial ROI, this stage evaluates Return on Expectations (ROE): the extent to which training achieves intended business outcomes. Here are the key steps to assess whether training meets strategic expectations:

- Work with stakeholders to set clear, measurable indicators linked to strategic goals like productivity, improved compliance, or increased customer retention.

- Use post-training evaluations at multiple intervals (e.g., 3, 6, and 12 months) to measure sustained improvements in employee performance and business outcomes.

- Incorporate manager feedback, customer insights, and operational assessments to capture the full picture of effectiveness.

- Check if leadership and department heads see tangible improvements that align with their initial expectations.

- Use evaluation findings to adjust training programs, optimize delivery methods, and implement targeted follow-ups for continuous improvement.

Although the Kirkpatrick Model is effective, it has some limitations:

- Limited application. It can only tell you whether your training works or not. That means if you use this model, you won’t get data that helps improve the course per se.

- Questionable structure. The Kirkpatrick Model assumes that each level naturally leads to success at the next, but there’s no solid proof that it always works that way.

Don Kirkpatrick himself recognized these issues and suggested a better way of using his method: begin with the end in mind. Working backward through the four levels during the design phase will allow you to tailor your training program to achieve your specific goals.

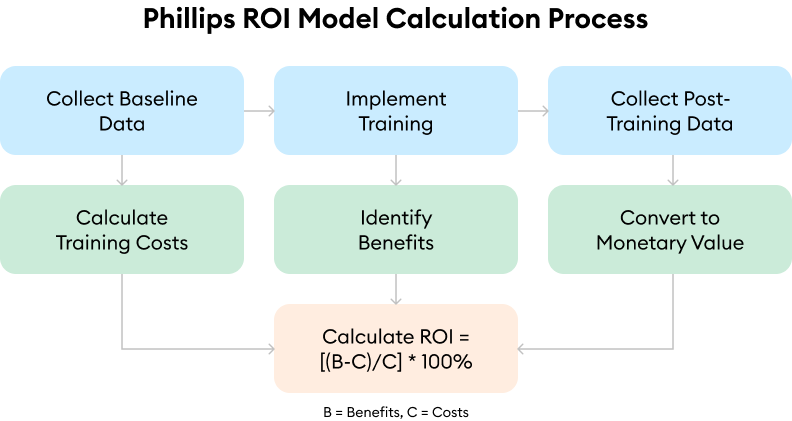

Phillips ROI model

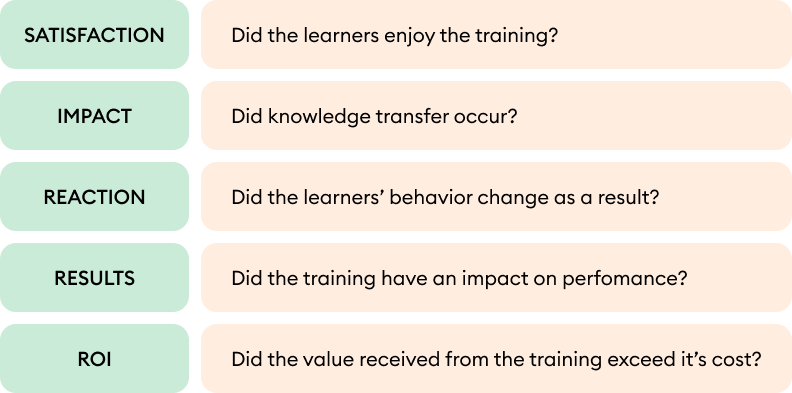

The Phillips ROI model mirrors the levels of the Kirkpatrick Model, with a crucial addition at the end — return on investment (ROI).

Unlike the Kirkpatrick Model, this model can tell you whether investing money in a training program was a good decision. The process is illustrated below:

The key steps to evaluate training using the Phillips ROI model are:

- Collect baseline data

Before training begins, gather pre-training performance metrics to establish a benchmark. This might include productivity levels, quality metrics, employee engagement scores, or financial indicators.

- Collect post-training data

After you deliver the training, collect the same metrics again and compare them with the baseline to identify changes. Use control groups, trend analysis, or stakeholder feedback to separate the impact of training from other factors like market shifts, process changes, or seasonal demand.
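With a control group, a simple way to isolate the training effect is to subtract the control group’s change from the trained group’s change. This is a simplified sketch with hypothetical numbers; it assumes the two groups are otherwise comparable.

```python
def training_effect(trained_before, trained_after, control_before, control_after):
    """Change in the trained group minus the change in the control group."""
    trained_change = trained_after - trained_before
    control_change = control_after - control_before
    return trained_change - control_change

# Hypothetical units produced per employee per week.
effect = training_effect(
    trained_before=40, trained_after=48,
    control_before=41, control_after=43,
)
print(f"Improvement attributable to training: {effect} units per week")
```

Here the trained group improved by 8 units and the control group by 2, so only the 6-unit difference is credited to training.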

- Identify total training costs

To calculate ROI, account for all direct and indirect costs of the training program, including spending on:

- Course development — instructional designers, content creation, and materials.

- Training delivery — costs of trainers, venues, and/or platforms.

- Investment of employee time — salaries during training sessions and lost working hours.

- Technology and administration — software, reporting tools, and post-training support.
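Adding up the cost categories above is straightforward. The amounts in this sketch are hypothetical placeholders.

```python
# Hypothetical cost figures for one training program, by category.
costs = {
    "course_development": 12_000,
    "training_delivery": 8_000,
    "employee_time": 15_000,
    "technology_and_administration": 5_000,
}

def total_training_cost(cost_items):
    """Sum all direct and indirect cost categories."""
    return sum(cost_items.values())

total_cost = total_training_cost(costs)
print(f"Total training cost: ${total_cost:,}")
```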

- Identify benefits and convert them into monetary value

Compare your data from pre- and post-training evaluations. You can then translate performance improvements into financial terms by identifying key benefits, such as:

- Increased productivity: output per employee, project completion time, or efficiency gains.

- Quality improvements: fewer errors, higher compliance rates, and reduced rework.

- Higher customer satisfaction and retention: survey scores and repeat business.

- Cost reductions: lower operational expenses, employee turnover, and training repetition.

Then assign monetary values to these benefits using real business data. For example, if productivity increases by 5%, estimate the additional revenue it generated. Or, if turnover decreases, calculate the hiring and onboarding costs saved by not having to replace employees who would otherwise have left.
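Here is one way the two examples above could be turned into numbers. Every figure is hypothetical, and the productivity estimate rests on a simplifying assumption that revenue scales in proportion to productivity.

```python
def productivity_benefit(baseline_revenue, productivity_gain_pct):
    """Estimated extra revenue, assuming revenue scales with productivity."""
    return baseline_revenue * productivity_gain_pct / 100

def turnover_savings(departures_before, departures_after, replacement_cost):
    """Hiring and onboarding costs avoided because fewer employees left."""
    return (departures_before - departures_after) * replacement_cost

# Hypothetical annual figures for the trained team.
extra_revenue = productivity_benefit(baseline_revenue=800_000, productivity_gain_pct=5)
saved_hiring = turnover_savings(departures_before=10, departures_after=7,
                                replacement_cost=6_000)

total_benefits = extra_revenue + saved_hiring
print(f"Estimated monetary benefits: ${total_benefits:,.0f}")
```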

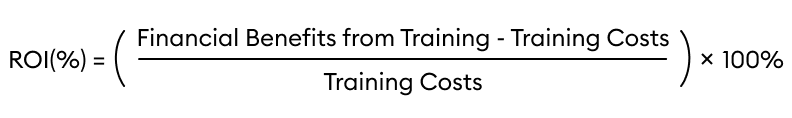

- Calculate ROI

Once you determine benefits and costs, calculate ROI using this formula:
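The standard Phillips calculation expresses net program benefits as a percentage of program costs: ROI (%) = (total benefits − total costs) / total costs × 100. A minimal Python sketch follows; the function name and the figures are hypothetical.

```python
def roi_percent(total_benefits, total_costs):
    """ROI (%) = (total benefits - total costs) / total costs * 100."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return 100 * (total_benefits - total_costs) / total_costs

# Hypothetical program: $58,000 in monetized benefits against $40,000 in costs.
roi = roi_percent(total_benefits=58_000, total_costs=40_000)
print(f"ROI: {roi:.0f}%")
```

With these example figures, every dollar spent on training returned the dollar itself plus 45 cents in net benefits.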

A positive ROI indicates that training generated more value than it cost, while a negative ROI suggests the need for program adjustments.

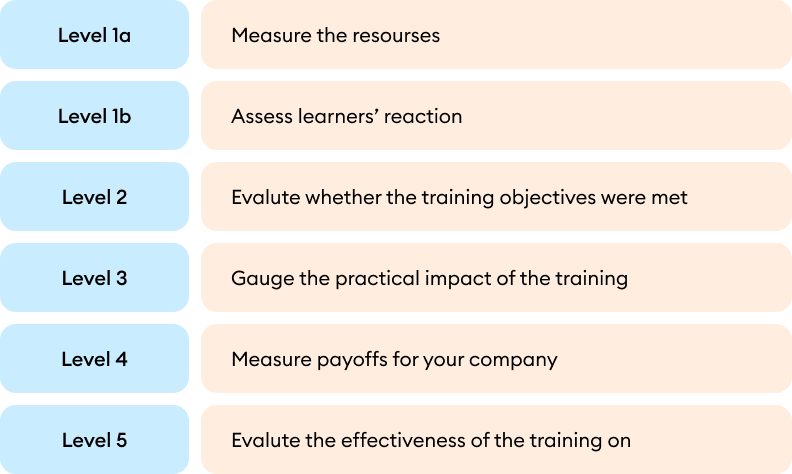

Kaufman’s five levels of evaluation

Building on the Kirkpatrick Model, Roger Kaufman introduced a five-level comprehensive framework. He split the first level into two parts, combined Kirkpatrick’s second and third levels into ‘micro’ levels, and introduced a fifth level to assess outcomes for both customers and society.

Here’s how to use Kaufman’s five levels of evaluation:

Level 1a: Input

Track the resources, like time and money, that were invested in your training program.

Level 1b: Process

Gauge how participants felt about the course.

Level 2: Acquisition

To assess the specific benefits of your training, check to see if it meets the goals for individual learners or small groups. This involves finding out if your learners have gained new knowledge and skills.

Level 3: Application

Assess learners’ ability to apply their newly acquired knowledge and skills to their work.

Level 4: Organizational payoffs

Measure payoffs for your company as a whole. A payoff can be an improvement in employee performance, a reduction in costs, or increased profits.

Level 5: Societal outcomes

At the final level, evaluate the impact that your course has on what Kaufman calls ‘mega-level clients,’ meaning business clients or society.

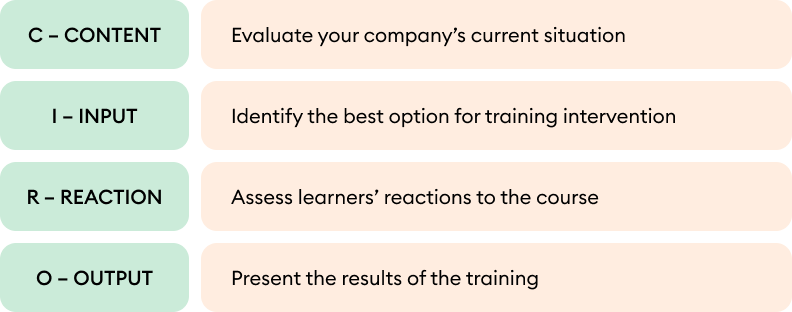

The CIRO model

CIRO stands for Context, Input, Reaction, and Output. This model was designed for evaluating management training, so it’s a strong choice if you want to assess management courses.

Stage 1: Context

Identify all the factors that could influence the training results and pinpoint where your organization falls short in performance. As a result, you’ll have a list of needs that should be organized according to the following two levels:

Immediate objectives

Immediate objectives pave the way for changes in employees’ behavior, so they usually involve acquiring new skills and knowledge through training or shifting attitudes.

The ultimate objective

The ultimate objective is the elimination of organizational shortcomings, such as poor customer service, low productivity, or low profit.

Stage 2: Input

Explore all possible methods and techniques for training. Also, think about how you will design, manage, and deliver your course to your learners. Assess your company’s resources to figure out the most effective way to use them to achieve your objectives.

Stage 3: Reaction

Collect feedback from your learners about the course. Focus on three key areas:

- Program content

- Approach

- Value addition

Your goal isn’t just to find out if they liked or disliked the course but also to gather insights on any changes they suggest for the training program. Note their recommendations for future improvements.

Stage 4: Output

Now, it’s time to showcase the outcomes of the training through four distinct levels of measurement:

- Learner

- Workplace

- Team or department

- Business

Select the level that aligns with your evaluation’s objective and the resources you have.

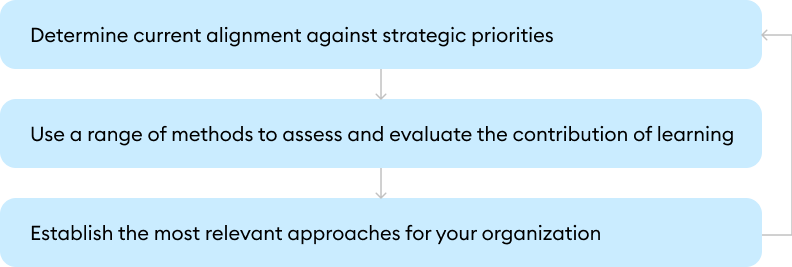

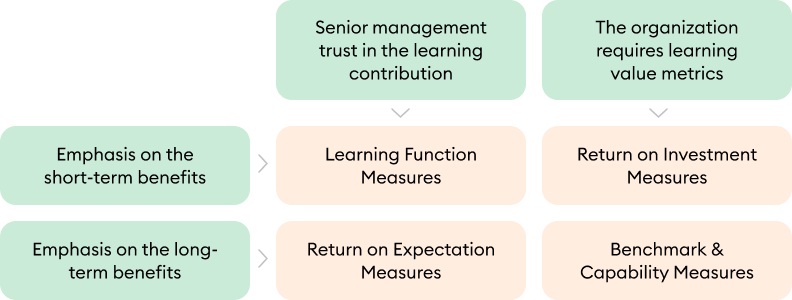

Anderson’s value of learning model

Unlike other models, Anderson’s Value of Learning model takes a broader perspective, concentrating on a company’s overall learning strategy instead of just a specific training program. It consists of three stages that help to identify the most suitable training strategies for your organization’s needs.

Stage 1

Determine if the existing learning programs align with your company’s strategic priorities.

Stage 2

The next step is to evaluate how learning influences strategic outcomes. A thorough data review helps identify progress, uncover gaps, and refine strategies for greater impact.

Stage 3

Select the most relevant approaches for your company depending on stakeholders’ goals and values:

- Emphasis on short-term benefits

- Emphasis on long-term benefits

- Senior management’s contribution to trust in learning

- The organization’s need for learning value metrics

Below is a table designed to help you identify the best approach for your organization.

You should choose a category that’s relevant to your situation and establish an approach that will help fulfill your organizational needs.

Training Evaluation Tools

Training evaluation tools are what you use to assess training programs. Key data collection methods usually fall into four categories: questionnaires, interviews, focus groups, and observations. We’ll also include an additional one — LMS reporting. To help organizations gain insights, it’s common to use these training evaluation methods together. Implementing AI can further enhance the evaluation process by automating sentiment analysis in surveys, transcribing and summarizing interview responses, and tracking engagement patterns during training sessions.

Now, let’s look at each one in greater detail.

Questionnaires

Questionnaires are the most frequently used method for gathering insights from participants. This tool is great for assessing learners’ reactions after a program.

Pros

- Enables the collection of a large amount of information

- Economical in terms of costs

- Saves time

- Reaches a broad audience

Cons

- Often results in a low response rate

- Might include unreliable responses

- Cannot clarify vague answers

- Questions can be interpreted subjectively by participants

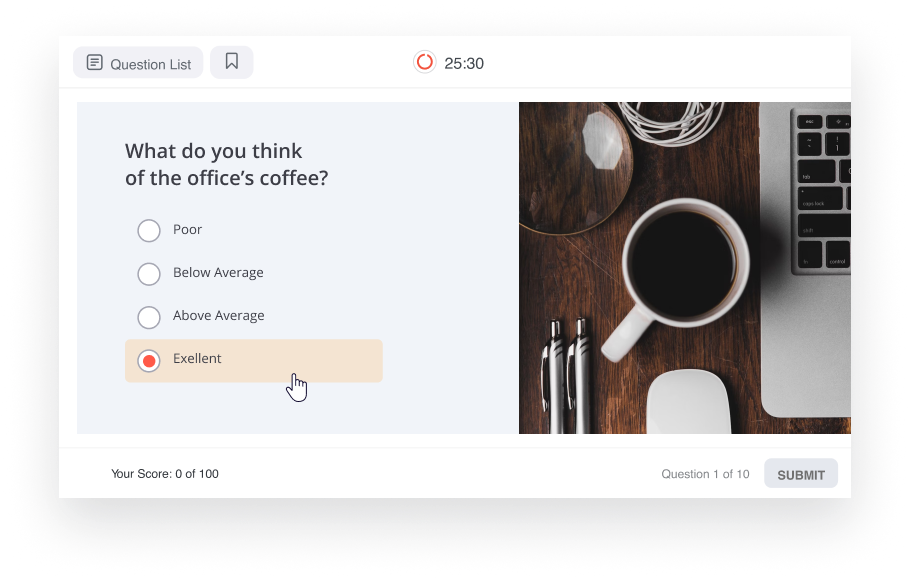

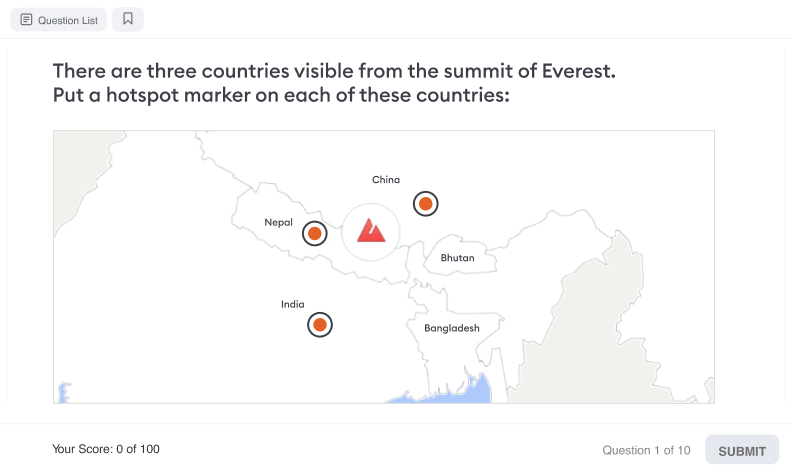

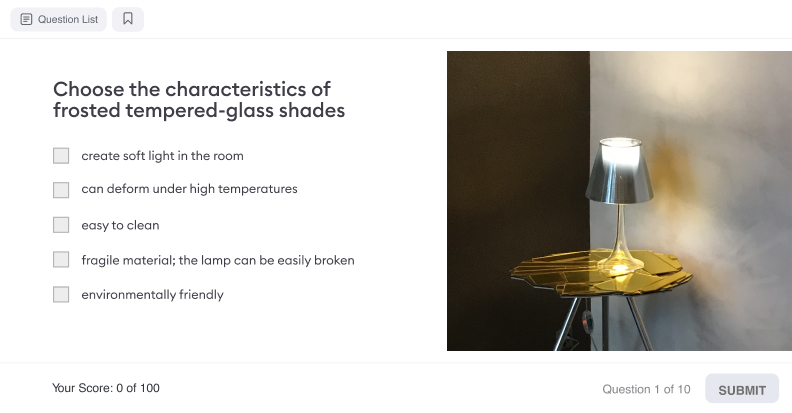

There is a broad range of software for creating and including quizzes and surveys in your training courses. For interactive, customizable, and engaging questionnaires, consider giving the iSpring Suite authoring tool a try. It enables you to design 14 different types of questions, add images to both questions and answers, and use many other features to assemble questionnaires that truly deliver results.

Moreover, iSpring Suite is also a powerful tool for creating courses directly in PowerPoint and enhancing them with quizzes, role-plays, screen recordings, and interactions. Take a look at this interactive module to get a sense of what you can do:

If you’re looking for more options, tools like SurveyMonkey and Qualtrics are also great for creating surveys and gathering feedback. They let you build custom questionnaires, analyze responses, and get insights to improve training programs.

Interviews

Interviews offer a deeper dive into employees’ attitudes, behaviors, and mindsets. They aren’t restricted to traditional face-to-face formats and can also be conducted over the phone or online.

Pros

- Provides an enhanced understanding of employees’ perspectives

- Allows you to ask clarifying questions

- Offers flexibility

Cons

- Requires a significant investment of time

- Limited reach, addressing learners one-on-one

AI-powered tools like Rev can help transcribe and analyze interview responses, making the evaluation process faster and more efficient.

Focus groups

If you need employee insights but lack resources for interviews, organize groups by job area, performance trends, or other relevant traits. Then, facilitate a group discussion to gather their reactions, insights, feedback, and recommendations.

Pros

- Gathers detailed feedback from multiple individuals simultaneously

- Allows for targeted questions to gain specific insights

Cons

- Requires a considerable amount of time

- Needs a team for management, including a moderator and an assistant

- Requires an environment that is conducive to open and honest communication

Observations

Observation stands out because it doesn’t depend upon what employees say about themselves or others. By simply observing someone at work, you can see firsthand if they’re applying new skills and knowledge. However, this approach does have its limitations.

Pros

- Cost-effective

- Offers a realistic perspective, free from opinion bias

- Captures valuable non-verbal information

- Can be implemented upon completion of the course

Cons

- Requires time, focusing on one individual at a time

- Might provide unreliable information, as people tend to improve their behavior when observed

- Observations can be misinterpreted

- Fails to uncover the reasons behind an employee’s attitude or behavior

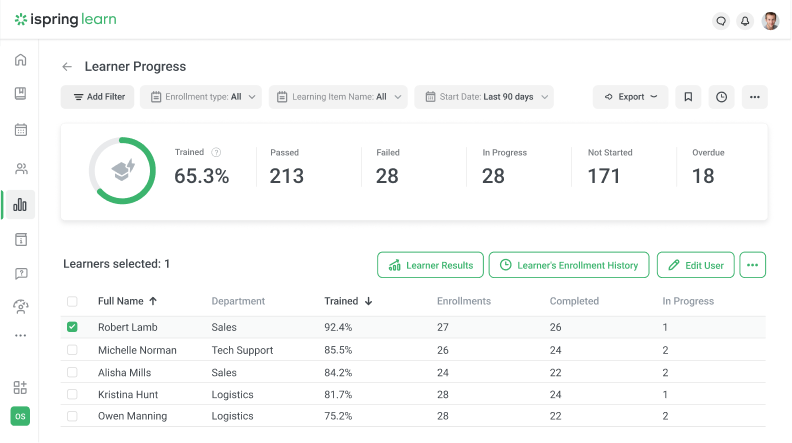

LMS reporting

A learning management system (LMS) is software for delivering online programs to your learners. Its reporting feature collects and analyzes performance data to identify weaknesses in your courses.

Pros

- Saves time

- Automates the evaluation process

- Offers objective feedback from the system

- Easily identifies weaknesses in the training program

- Available 24/7

Cons

- Limited to online programs

- Doesn’t delve into the reasons behind an employee’s attitude or behavior
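To give a sense of how LMS report data can point to weak spots in a course, the sketch below flags quiz questions that most learners answer incorrectly. The row format and the 50% threshold are assumptions for illustration and do not reflect any particular LMS export.

```python
# Hypothetical rows from an LMS quiz report: (question_id, answered_correctly)
attempts = [
    ("q1", True), ("q1", True), ("q1", False),
    ("q2", False), ("q2", False), ("q2", True),
    ("q3", True), ("q3", True), ("q3", True),
]

def weak_questions(rows, threshold=0.5):
    """Return ids of questions whose share of correct answers is below threshold."""
    totals, correct = {}, {}
    for question_id, is_correct in rows:
        totals[question_id] = totals.get(question_id, 0) + 1
        correct[question_id] = correct.get(question_id, 0) + int(is_correct)
    return sorted(q for q in totals if correct[q] / totals[q] < threshold)

flagged = weak_questions(attempts)
print("Questions to review:", flagged)
```

A flagged question may indicate unclear wording or a topic that the course material doesn’t cover well enough.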

Discover the advantages of an LMS and its reporting capabilities firsthand by signing up for a free trial of iSpring Learn. Beyond generating reports, the iSpring Learn LMS allows you to build courses, incorporate gamification into your eLearning projects, automate routine tasks, and much more.

When Is the Best Time to Evaluate Training?

You can evaluate your training programs either before or after delivery using formative and summative methods. While a comprehensive approach is ideal, limited resources may not always allow for both. Still, even focusing on one evaluation type can provide valuable insights and enhance your training process.

Before training launch (formative)

Formative evaluation helps you identify and resolve issues in your course before it reaches learners. For instance, it might take the form of inviting a focus group or a subject matter expert to review the training to uncover any potential weaknesses or mistakes.

After training completion (summative)

Summative evaluation involves methods like surveys, interviews, and tests to gather feedback from participants. This feedback enables you to refine the program for future learners.

To Sum Up

There are many training evaluation methods and tools, each with unique benefits. Instead of looking for a single “best” approach, combine different models to align with your company’s goals. The right tools make this process easier and more effective.

Evaluate with iSpring Learn to turn insights into measurable improvements and create training programs that drive real results.